IDP Projects

We welcome students to complete their IDP at our chair. Feel free to send your credentials (CV, transcript) to the e-mail address given for the project you are interested in.

You can find the list of open IDPs below.

IDP: PEER AI-Writing Tool: Extension for Classroom and Homework Use

PEER (Paper Evaluation and Empowerment Resource) is a tool developed at our department to help students improve their essay-writing skills. It is designed to support learners at all educational levels, from elementary school to university, by providing constructive feedback on various types of writing. The system analyzes student submissions, provided as direct text input or uploaded images, and uses ChatGPT to generate personalized feedback and suggestions for improvement. To broaden PEER’s application, we want to extend the tool to serve two additional key stakeholders: teachers and parents. For this purpose, we plan to develop two new interfaces. The first will enable teachers to create restricted links for classroom use and to gain insights into the learning needs of their students. The second will offer parents tailored features, such as multilingual support, to better assist their children with homework.

Prerequisites: Python, HTML, LLMs

Groups of up to 2 students are welcome! Please contact: babette.buehler@tum.de

IDP: Develop an AI-Powered Reading Companion for Elementary School Learners

The goal of this IDP is to develop a new tool aimed at improving the reading comprehension and reading speed of elementary school children. The project focuses on creating a user-friendly platform where students can engage with AI-generated texts that match their interests and skill levels, making reading both educational and enjoyable. The tool will include functionalities that support students while they practice reading, such as word explanations, pronunciation assistance, and comprehension questions. It will also incorporate gamification by awarding points based on reading time and comprehension, creating a motivating learning experience. If you are interested in developing this educational tool as part of an interdisciplinary student project, we encourage you to reach out. Back-end and front-end development skills, as well as experience with LLMs and UX design, are a plus. Groups of up to 2 students are welcome to apply!

For further information write an email to: babette.buehler@tum.de

(closed) IDP: Leveraging Generative AI Chatbots in VR Museums for Enhanced Educational Experiences

Generative AI (GenAI) has gained immense popularity, particularly for its applications in education. Within the virtual reality (VR) realm, where interactive environments are pivotal, GenAI, especially Large Language Models (LLMs), offers promising potential to enrich user experiences. An exemplary use case is the creation of immersive VR museums, where LLM-based chatbots serve as interactive guides. This project aims to propose, develop, and assess an immersive VR museum enhanced with LLM-based chatbots, employing advanced machine learning and eye-tracking technology.

Prerequisites: Unity/Unreal, Python, C#, Machine learning

Contact: hong.gao@tum.de

(closed) Intelligent VR learning environments for teacher training

The rapid advancement of virtual reality (VR) technologies has brought about significant transformations in the field of education. VR provides immersive and interactive learning environments that have proven highly effective in supporting skill development among learners. VR can also serve as a valuable tool for enhancing the pedagogical strategies of teachers through targeted training programs. In this project, our main objective is to develop an intelligent VR learning platform specifically designed for training teachers in data literacy skills. The platform will incorporate interactive features, including gamification elements and a chatbot supported by generative AI, to create engaging and effective learning experiences. We are currently seeking bachelor's or master's students with a background in computer science and human-computer interaction who are interested in contributing to the development of such VR learning systems.

For further information write an email to: hong.gao@tum.de

Web Application Development for Distance Learning

The importance of remote systems, remote data collection, and distance learning became especially clear during the COVID-19 pandemic. With the growing focus on MOOCs and similar formats, distance learning may soon become a standard complement to in-person classroom teaching. However, very little is known about the behaviors of students during distance learning. This project is a first attempt to develop a web application that is aware of learners' behaviors while they learn.

For further information, write an email to: efe.bozkir@tum.de

VR Classroom Design with Generative Models

Virtual Reality (VR) technology has revolutionized the way we learn and interact with information. In this project, we aim to design a VR classroom that leverages generative behavior and text models to enhance the learning experience for students. The project is likely best suited to students of Computer Science: Games Engineering, but it is also open to all other students who are interested in developing VR applications that integrate artificial intelligence.

For further information write an email to ozdelsuleyman@tum.de

IDP: ArtisanVR

Preserving and transmitting intangible cultural heritage (ICH) practices and knowledge is crucial for protecting cultural diversity and human creativity. Innovative tools such as large language models (LLMs) and virtual reality (VR) are increasingly used to develop immersive learning environments that help spread knowledge. This IDP looks into the pedagogical approaches and design ideas that support the creation of successful VR- and LLM-based learning environments for ICH.

For further information write an email to carrie.lau@tum.de.

Usable Privacy for Immersive Learning Settings

The importance of data privacy and security has recently been emphasized by regulations such as GDPR and CCPA. With current developments in technology, the amount of human data collected from tools and environments such as VR/AR has been growing. Because much of this data is biometric in nature, tools that provide novel ways of interaction might be seen as privacy-invasive by the public. To address these issues and develop human-centered solutions and regulations, users' privacy concerns, preferences, and behaviors need to be understood. In this project, these aspects will be researched, with a particular focus on immersive learning settings.

For further information write an email to: efe.bozkir@tum.de

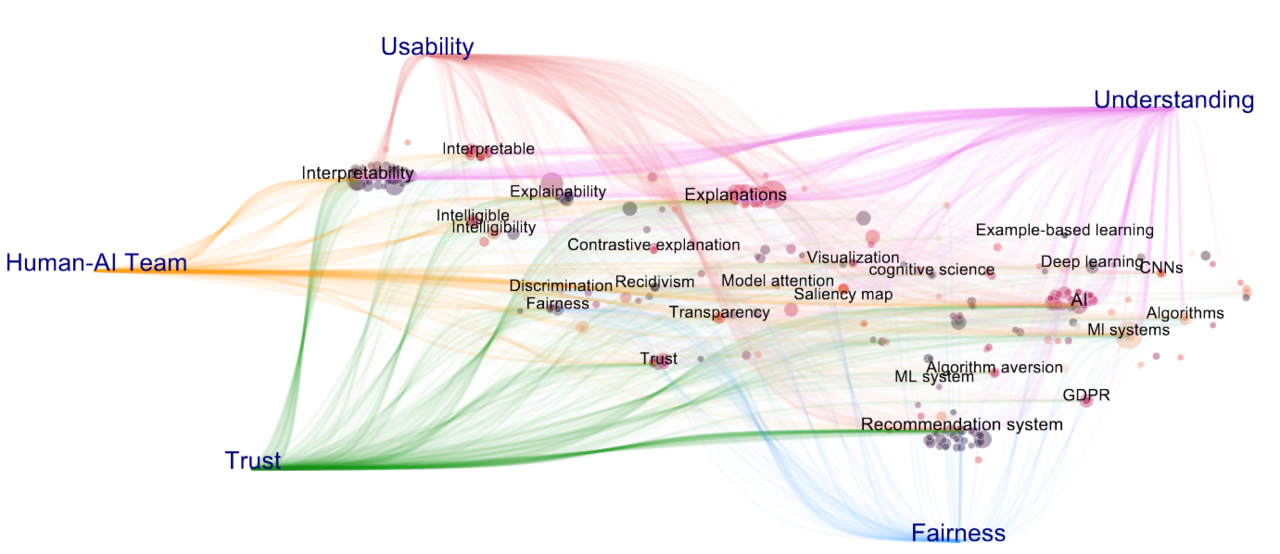

Human-centered XAI: Algorithm and User Experience

Explainable AI (XAI) is widely viewed as a sine qua non for ever-expanding AI research. A better understanding of the needs of XAI users and human-centered evaluations of explainable models are both a necessity and a challenge. In this project, we explore how AI and HCI researchers design XAI algorithms to optimize the user experience when interacting with black-box machine learning models. Specifically, we discuss how XAI technology improves different aspects of ML models, such as trustworthiness, fairness, and usability.

If you are interested in our project, please contact yao.rong@tum.de

Machine learning meets education and VR

Implementing a Virtual Reality Classroom using Unity with Multimodal Data Collection and Generative Models.

The objective of this task is to create a virtual reality classroom using Unity and integrate it with multimodal data collection features such as eye-tracking. Additionally, generative models will be used to create virtual avatars for teachers and students, providing a more personalized and immersive experience for the users.

For further information write an email to mengi.wang@tum.de.

Gaze-Guided Robotic Retrieval Assistant

In this project, you will combine computer vision, robotics, and human-computer interaction to implement a gaze-guided robotic retrieval assistant. You will design the system architecture and implement third-person remote gaze tracking algorithms, integrating them with a robotic platform.

Through this interdisciplinary project, you will contribute to the evolution of assistive robotics, gaining invaluable skills in problem-solving and cutting-edge technologies.

Requirements: You must have a background in computer science or robotics.

Contact: virmarie.maquiling@tum.de